Have you ever come across a video on social media that made you question the technology you use every day?

That is exactly what happened to me recently, and it led me down a rabbit hole of unexpected discoveries about my iPhone's voice-to-text feature.

The TikTok video that started it all

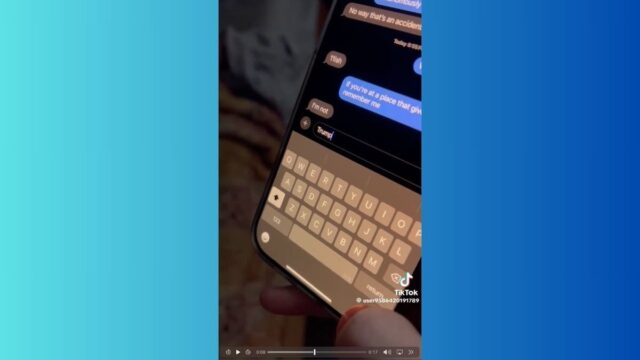

It all started when I came across a TikTok video claiming that, with Apple’s voice-to-text feature, saying the word “racist” would initially cause the word “Trump” to be typed before it was quickly corrected. Intrigued and somewhat skeptical, I felt compelled to investigate the claim myself.

TikTok video screenshot showing the iPhone's voice-to-text feature typing “Trump” (TikTok)

Putting it to the test

Armed with my phone, I opened the Messages app on my iPhone and started my experiment. To my surprise, the results matched what the TikTok video had shown. When I said “racist,” the voice-to-text feature initially typed “Trump” before quickly correcting it to “racist.” To make sure this was not a one-off glitch, I repeated the test several times. The pattern persisted, leaving me quite concerned.

Test showing the iPhone's voice-to-text feature typing “Trump” when the word “racist” was spoken (Kurt “CyberGuy” Knutsson)

When the technology gets it wrong

This behavior raises serious questions about the algorithms that power our voice recognition software. Could this be a case of artificial intelligence bias, where the system has inadvertently created an association between certain words and political figures? Or is it simply a quirk in voice recognition patterns? One possible explanation is that voice recognition software can be influenced by contextual data and usage patterns.

Given the frequent association of the term “racist” with “Trump” in the media and public discourse, the software could erroneously predict “Trump” when “racist” is spoken. This could result from machine learning algorithms that adapt to prevalent language patterns, which leads to unexpected transcriptions.
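To make that hypothesis concrete, here is a minimal sketch of how a text-based prior could, in principle, outrank what was actually heard. It is a toy illustration only, not Apple's implementation: the function `rescore`, the placeholder words and every probability below are made-up assumptions.

```python
import math

def rescore(acoustic_logprobs, prior_logprobs, prior_weight):
    """Rank candidate words by acoustic score plus a weighted text prior.

    All inputs are hypothetical. Candidates missing from the prior
    get a small floor probability so the sum stays finite.
    """
    floor = math.log(1e-6)
    combined = {
        word: score + prior_weight * prior_logprobs.get(word, floor)
        for word, score in acoustic_logprobs.items()
    }
    # Best-scoring candidate first.
    return sorted(combined, key=combined.get, reverse=True)

# Made-up numbers: the audio slightly favors the word that was spoken,
# while the text prior strongly favors a frequently co-occurring word.
acoustic = {"spoken_word": math.log(0.6), "associated_word": math.log(0.4)}
prior = {"spoken_word": math.log(0.1), "associated_word": math.log(0.9)}

print(rescore(acoustic, prior, prior_weight=0.0))  # audio only
print(rescore(acoustic, prior, prior_weight=1.0))  # audio plus prior
```

With the prior switched off, the spoken word ranks first; with the prior weighted in, the associated word overtakes it. A system that shows an early guess and then revises it as more evidence arrives could briefly display the wrong word in this way, though whether that is what happened here is unknown.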

A person on an iPhone (Kurt “Cyberguy” Knutsson)

As someone who frequently relies on voice-to-text, this experience has made me reconsider how much I trust this technology. While it is generally reliable, incidents such as this one serve as a reminder that AI-powered features are not infallible and can produce unexpected and potentially problematic results.

Voice recognition technology has made significant advances, but it is clear that challenges remain. Developers are still addressing problems with proper nouns, accents and context. This incident underscores that, although the technology is advancing, it is still a work in progress. We reached out to Apple for comment about this incident but did not receive a response before our deadline.

Kurt’s key takeaways

This TikTok-inspired investigation has been eye-opening, to say the least. It reminds us of the importance of approaching technology with a critical eye and not taking every feature for granted. Whether this is a harmless glitch or a sign of a deeper problem of algorithmic bias, one thing is clear: we must always be prepared to question and verify the technology we use. This experience has certainly given me pause and reminded me to review my voice-to-text messages before sending them.

How do you think companies like Apple should address and prevent such errors in the future? Let us know by writing to us at Cyberguy.com/contact.

For more tech tips and security alerts, subscribe to my free CyberGuy Report newsletter by heading to Cyberguy.com/newsletter.

Ask Kurt a question or tell us what stories you would like us to cover.

Copyright 2025 Cyberguy.com. All rights reserved.